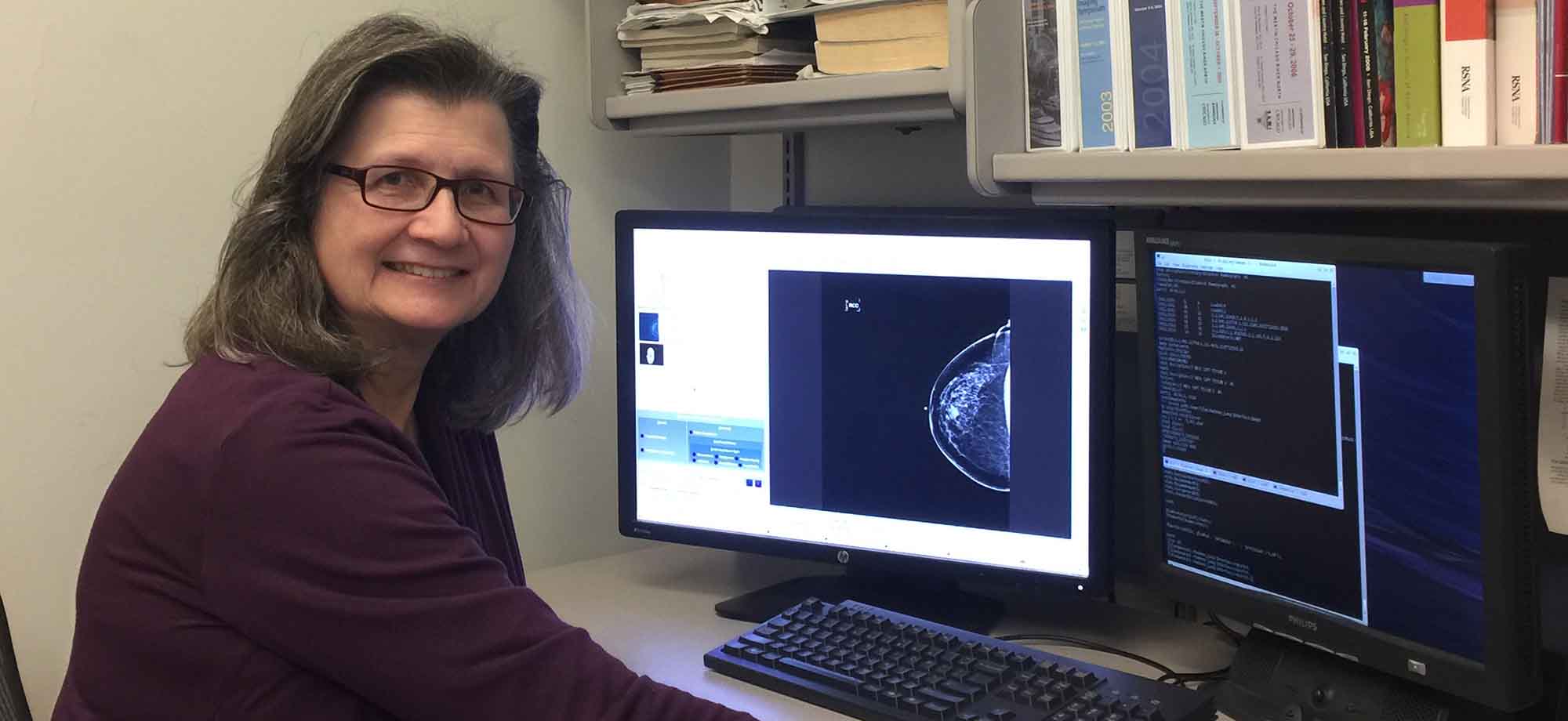

Since the beginning of her career, Maryellen Giger, PhD’85, has worked to use artificial intelligence for more accurate interpretation of medical images. (Photo courtesy Maryellen Giger, PhD’85)

Professor and entrepreneur Maryellen Giger, PhD’85, brings computer-aided breast cancer detection and diagnosis from bench to bedside.

Anyone who has undergone cancer screening knows the uncertainty and difficulty—financial, logistical, and emotional—of the process. Every year, I spend an anxious week repeatedly logging into a patient portal looking for test results from my routine mammograms. Decades ago, when I had a diagnostic mammogram for an ultimately benign lump, negative results came as a nondescript postcard in the mail. Yet both portal and postcard are preferable to the dreaded phone call—what many patients receive when the scan isn’t definitively negative, and they have to come back.

Definitive can be hard to come by. Sometimes dense tissue makes it difficult to see the entire breast. Sometimes the mammogram reveals a suspicious area that warrants a closer look. In these cases, the physician will order additional imaging (more mammograms, an ultrasound, or an MRI) to get a clearer picture or to diagnose what the first test detected. The extra imaging helps determine whether there are hidden masses and whether they’re likely to be cancerous. A patient might then undergo a biopsy. If it confirms cancer, more imaging might be used to evaluate the extent of the cancer, plan treatment, or assess response to treatment in lieu of repeated biopsies. With better breast imaging, radiologists could find, classify, or rule out cancer sooner, reducing anxiety, medical risk, and costs for millions of patients.

This is the goal that drives the work of medical physicist Maryellen Giger, PhD’85. Giger, the A. N. Pritzker Professor of Radiology, the Committee on Medical Physics, and the College, is a pioneer of artificial intelligence (AI) in cancer imaging. For decades she and her fellow medical physicists have been developing artificial neural networks to join forces with old-fashioned human intelligence in the cause of revolutionizing radiological imaging and analysis.

Imaging is crucial, first to screen for potential cancer and then to help diagnose what’s found. Each of these steps depends on a different type of radiological picture, and each is only as good as those pictures. How clear and complete are they? How noticeable do they render abnormal areas? The technology is continually advancing, from the earliest X-rays (first produced by accident by sending electricity through a vacuum inside a glass tube) to ultradetailed MRIs that require no contrast agents, now in development here at UChicago.

Once the images have been captured, they need to be interpreted. This is where Giger’s work comes in. Right now, medical images are mainly read by radiologists, whose work is as much art as science. Over their careers, they learn that this kind of spot is usually a cyst, while that kind of spot is often a tumor. And they learn that a tumor that looks like this usually ends up being benign, while one that looks like that is probably malignant. These are qualitative judgments.

But a digital image holds more information than even the most experienced human eye can appreciate. Exactly how irregular—quantitatively—are the margins of that tumor? What’s the actual volume of that mass? A human radiologist can estimate, but a computer can calculate. Artificial intelligence algorithms can reveal and analyze such hidden data, supporting the radiologist’s initial evaluation and helping the clinician make decisions. This assistance could do wonders, potentially reducing the number of false negatives (missed signs of cancer), false positives (harmless areas deemed suspicious), and unnecessary biopsies. But someone needs to teach the AI how to see and what to look for.

Giger sketches out her research plans on a notepad at her desk in the radiology department at UChicago Medicine, during one of her respites from traveling this past winter. She has just returned from San Francisco, after being in Washington, DC, the week prior, and will be heading to Houston the week after for conferences in medical imaging and optics.

She starts at the beginning, using the Where’s Waldo? books to explain the difference between detection (screening) and diagnosis. “Detection is finding things red-and-white striped. Diagnosis is saying the red-and-white thing is Waldo.” Now imagine a thousand-page book, each page an X-ray, ultrasound, or MRI, in which Waldo’s cancerous presence appears on only four pages. The American Cancer Society estimates that 279,100 new cases of breast cancer will be found in 2020; at four cases per thousand pages, finding them all is like searching nearly 70 million pages for the concealed character.
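The 70-million figure is a straight scaling of the analogy. A minimal Python sketch of the arithmetic, assuming (as the analogy does) four cancer pages in every thousand:

```python
# Scale the Where's Waldo? analogy up to the ACS 2020 estimate.
new_cases_2020 = 279_100       # ACS estimate of new breast cancer cases in 2020
cancer_pages_per_book = 4      # pages showing cancer in the analogy's book (assumed rate)
pages_per_book = 1_000         # pages in one book

# Each case corresponds to 1,000 / 4 = 250 pages that must be searched.
pages_per_case = pages_per_book / cancer_pages_per_book
total_pages = new_cases_2020 * pages_per_case

print(f"{total_pages:,.0f} pages")  # 69,775,000 pages, i.e. roughly 70 million
```

The ratio works out to 250 pages per case, so the total lands just under 70 million.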

But if a computer, through artificial intelligence, can be trained both to spot stripes and then to decide if they’re an umbrella, a beach towel, or in fact Waldo, radiologists can catch and clinicians can treat disease earlier. This is what Giger has been working on for over 30 years.

Giger’s research is built on computer vision, a field of AI that trains computers to “see”—to identify, interpret, classify, and then react to visual images. That includes photographs and videos, and in Giger’s case, radiographs and other medical images such as MRIs and CTs.

Studying physics, math, and health science as an undergraduate at Benedictine University, Giger spent summers at Fermi National Accelerator Laboratory. She was drawn to medical physics, which uses physics and math to understand biomedical processes and diagnose and treat diseases. A career in medical physics, she thought, had the potential to affect society in her lifetime.

Medical physicists usually pursue either imaging science or radiation therapy. Giger chose imaging because she’s always relished analytical challenges. When she was a medical physics graduate student at UChicago in the early 1980s, radiology was transitioning from X-ray screen-film radiography (similar to photographic film) to digital imaging. She worked on basic properties in digital radiography, like pixel size, resolution, and image noise. This work is the basis of quantitative imaging: extracting quantifiable characteristics from images to help assess the nature of what’s being shown.

In the 1980s and ’90s, Giger collaborated with radiologists and other imaging scientists in the Department of Radiology to establish the field of computer-aided detection. CAD is AI that uses computer vision to review medical images, providing a second set of “eyes”: a second opinion to aid the radiologist. Giger’s team formulated concepts and designed algorithms using machine learning, which includes systems that learn from data (large, anonymized sets of tumor images from diverse populations), identify patterns, and make decisions with minimal human intervention.

To train such systems, scientists must use medical images that are already confirmed by pathology to be cancerous or not. These are converted into minable data: calculations of features like size, shape, or texture. Such metrics are correlated with cancer presence and progression, determined through clinical documentation or molecular and genomic testing. The study of the relationship between these computer-extracted features (i.e., radiomics) and the diseases they signify can be used in cancer discovery—adding to the foundational knowledge needed to advance cancer diagnosis, treatment, and prevention.
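As an illustration of what “minable data” can look like, the sketch below computes three simple shape features from a segmented lesion. It is a hypothetical, two-dimensional Python example, not the feature set used in Giger’s lab: the mask format, the pixel-edge perimeter, and the compactness measure (4π·area/perimeter², higher for rounder shapes) are assumptions chosen for clarity.

```python
import math

def shape_features(mask):
    """Compute simple 2D shape features from a binary lesion mask.

    mask: list of equal-length rows of 0/1 values, where 1 marks lesion pixels.
    Returns area (pixel count), perimeter (count of exposed pixel edges),
    and compactness = 4*pi*area / perimeter**2 (higher means rounder).
    """
    rows, cols = len(mask), len(mask[0])
    area = 0
    perimeter = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            area += 1
            # Count each side of this pixel that borders background or the image edge.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if not (0 <= nr < rows and 0 <= nc < cols) or not mask[nr][nc]:
                    perimeter += 1
    compactness = 4 * math.pi * area / perimeter ** 2 if perimeter else 0.0
    return {"area": area, "perimeter": perimeter, "compactness": compactness}

# A compact square versus a spiky, cross-shaped outline.
square = [[1] * 4 for _ in range(4)]
cross = [[0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0],
         [1, 1, 1, 1, 1],
         [0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0]]
print(shape_features(square))
print(shape_features(cross))
```

Counting pixel edges overstates the perimeter of curved outlines, so compactness values like these are only comparable between lesions measured on the same pixel grid.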

The machine learns from these relationships the way radiologists learn their craft: for instance, a digital image that shows a smooth oval lump is usually benign, and two successive images that show a measurable decrease in tumor size may mean a treatment is working.
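The learning step can be sketched with the simplest textbook classifier, logistic regression trained by gradient descent. This is a swapped-in illustration, not the method used in Giger’s lab: the single “margin irregularity” feature and every training value below are invented.

```python
import math

def train_logistic(xs, ys, lr=0.5, epochs=5000):
    """Fit p(malignant | x) = sigmoid(w*x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x   # derivative of log loss with respect to w
            grad_b += p - y         # derivative of log loss with respect to b
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    """Probability of malignancy for feature value x under the fitted model."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

# Made-up training set: one hypothetical "margin irregularity" feature in [0, 1],
# labels as if confirmed by pathology: 0 = benign, 1 = malignant.
irregularity = [0.10, 0.15, 0.20, 0.25, 0.65, 0.70, 0.80, 0.90]
malignant = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(irregularity, malignant)
smooth_oval = predict(w, b, 0.12)     # low irregularity
irregular_mass = predict(w, b, 0.85)  # high irregularity
print(f"smooth oval: {smooth_oval:.3f}, irregular mass: {irregular_mass:.3f}")
```

After training, the smooth, compact lesion gets a probability well below 0.5 and the irregular one well above it, mirroring the radiologist’s rule of thumb. Real systems combine many features and are trained on large, pathology-confirmed datasets.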

CAD can be developed for use on X-ray, magnetic resonance, and ultrasound images, and not necessarily just for breast cancer. Giger has spent most of her research time on breast cancer imaging, but her projects have involved other types of cancer, including lung, prostate, and thyroid.

Breast cancer will cause an estimated 42,690 deaths in 2020, according to the American Cancer Society. That’s not a trivial number, but with earlier detection and better treatment options, the mortality rate has declined over the past 30 years. For women, who account for 99 percent of cases, the death rate is down 40 percent. (Lacking sufficient data on trans individuals, the ACS reports statistics and issues guidelines in terms of men and women.*)

Deep learning networks are part of the story of that decline. Emerging in the early 1990s, they enabled computers to learn directly from image data without human direction. At the time, Giger and colleagues were using the early technology to develop the first version of computer-aided detection (now called CADe to distinguish it from computer-aided diagnosis, CADx, which came later). The system was initially developed for breast and lung images.

But searching screening mammograms for cancer isn’t as simple as scanning a picture for red-and-white stripes. X-rays are grayscale, after all. And of the nearly 40 million mammograms performed in the United States each year, about half show dense breast tissue, which can both increase breast cancer risk and obscure small masses, making them harder to see with the naked eye.

Giger’s lab develops pattern-recognition software that looks for visual signs associated with cancer: lighter gray dense areas, possibly with radiating tissue patterns that suggest cancerous masses, and bright white spots that may be microcalcifications, small calcium deposits that indicate some underlying process in the breast. That process is sometimes harmless but sometimes dangerous, as with ductal carcinoma in situ, an early-stage cancer that may or may not become invasive. If the algorithm finds these patterns, it can flag questionable regions the radiologist might have missed.
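One piece of that pattern recognition, finding small bright spots, can be sketched as thresholding plus connected-component grouping. The Python below is a simplified, hypothetical illustration on a single image patch: the brightness threshold, maximum spot size, and minimum cluster count are made-up parameters, not clinical criteria or the lab’s actual algorithm.

```python
from collections import deque

def find_bright_spots(image, threshold):
    """Group 8-connected pixels brighter than `threshold` into candidate spots.

    image: 2D list of grayscale values (higher = brighter/whiter).
    Returns a list of spots, each a list of (row, col) pixel coordinates.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    spots = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first search collects every bright pixel connected to (r, c).
            spot, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                pr, pc = queue.popleft()
                spot.append((pr, pc))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = pr + dr, pc + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and not seen[nr][nc] and image[nr][nc] > threshold):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            spots.append(spot)
    return spots

def flag_region(image, threshold=200, max_spot_size=4, min_spots=3):
    """Flag a patch for radiologist review if it holds a cluster of small bright spots.

    All three parameters are hypothetical illustration values, not clinical criteria.
    """
    small = [s for s in find_bright_spots(image, threshold) if len(s) <= max_spot_size]
    return len(small) >= min_spots

# Gray background (80) with three isolated bright pixels (250).
patch = [[80] * 6 for _ in range(6)]
for r, c in [(1, 1), (1, 4), (4, 2)]:
    patch[r][c] = 250
print(flag_region(patch))
```

A flagged patch is only a prompt for the radiologist; deciding whether the spots matter remains a human judgment.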

In 1990 Giger and two other University of Chicago medical physicists (Kunio Doi, the Ralph W. Gerard Professor Emeritus of Biological Sciences, and Heang-Ping Chan, PhD’81, now a professor of radiology at the University of Michigan) patented their CADe method and system to detect and classify abnormal areas in mammograms and chest radiographs. Several more patents followed, and soon the patents and software were licensed by a company named R2 Technologies, which translated the research into a commercial product.

It took a few years of further development for R2 to create the first FDA-approved CADe system, called ImageChecker, to assist in the detection of breast cancer on digitized mammograms. By 2016 about 92 percent of breast cancer screening facilities were using a computer-aided detection system like this for mammogram second reads.

CADe is run on mammograms after the radiologist’s initial read, like a spellchecker. Having a second human radiologist do a double read, a common practice in Europe, has been shown to decrease the rate of false negatives, but at a high cost in money and labor. Computer-aided detection acts as that second reader; in fact, this is where R2 got its name. If the system misses a suspect region that the radiologist already noted, the human’s judgment overrides the computer’s. But the computer sometimes flags a region that the radiologist might have overlooked. In these cases, it’s up to the radiologist to decide whether that region should get further attention. CADe is meant to aid human evaluation, not supersede it.
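The second-reader logic described above can be sketched as a merge step in which the radiologist’s findings always stand and unmatched CAD marks are queued for review. The `Mark` type, the distance tolerance, and the function names are hypothetical, chosen only to illustrate the workflow.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Mark:
    x: float  # location in image coordinates (hypothetical units: mm)
    y: float

def near(a: Mark, b: Mark, tolerance: float) -> bool:
    """True if two marks lie within `tolerance` of each other."""
    return (a.x - b.x) ** 2 + (a.y - b.y) ** 2 <= tolerance ** 2

def second_read(radiologist_marks, cad_marks, tolerance=5.0):
    """Combine a radiologist's first read with CAD prompts.

    Every radiologist finding is kept, whether or not CAD also marked it.
    CAD prompts that match no radiologist finding are returned separately
    for the radiologist to accept or dismiss; nothing is added automatically.
    """
    to_review = [m for m in cad_marks
                 if not any(near(m, r, tolerance) for r in radiologist_marks)]
    return list(radiologist_marks), to_review

kept, review = second_read([Mark(10, 10)], [Mark(11, 10), Mark(40, 25)])
print(kept, review)
```

Here the CAD mark at (11, 10) simply agrees with the radiologist, while the one at (40, 25) becomes a prompt. A radiologist finding that CAD missed is still kept, matching the rule that the human’s judgment overrides the computer’s.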

Early in the development of the CADe algorithm, Giger thought, “We should give this away so that all could benefit.” But if scientists made basic research widely available, it might, paradoxically, be less useful. “There would be no economic incentive for a company to make it into a product,” she says, to “handle the expense of taking it through FDA and then making it clinically viable.” Commercialization moves research from bench to bedside, making it possible for a scientist’s ideas and developments to eventually help patients.

When a product initially developed by a UChicago faculty member is licensed, some of the funds from royalties and other payments are funneled back to the lab to continue the underlying basic research, and some go to the inventors, the departments, the division, and then to the University’s technology transfer enterprise.

In 1980, the year after Giger came to UChicago, a new law changed how patentable inventions emerging from universities were handled. The Bayh-Dole Act made universities, rather than the federal agencies that funded the work, “the default owner” of those inventions, writes Eric Ginsburg in a brief history of tech transfer at UChicago. Ginsburg, the interim director of technology commercialization at the Polsky Center for Entrepreneurship and Innovation, notes that the act also encouraged universities to commercialize those inventions and required them to share revenue with the inventors. In the following decade, the number of patents filed by universities quadrupled.

When UChicago created an internal office in 2001 to manage intellectual property and start-ups, Giger chaired the university committee that proposed its structure, scope, and policies. “We looked at what our peer institutions were doing,” she says, “and then developed our own recommendations to create UChicagoTech,” which ultimately became part of the Polsky Center.

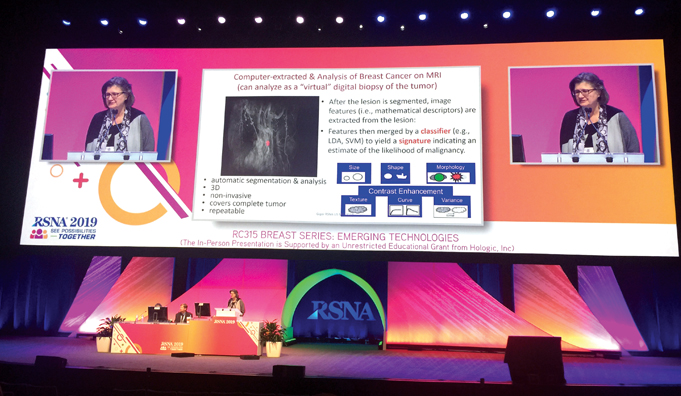

Last December TIME magazine published its list of the 100 best inventions of 2019. One of the 10 selections in health care was a product called QuantX, the first machine-learning-driven system cleared by the FDA to aid in breast cancer diagnosis (in other words, a CADx system). QuantX analyzes diagnostic breast MRIs and offers radiologists a score related to the likelihood that a tumor is benign or malignant, using AI algorithms developed in Giger’s lab. The magazine cover sits on a bookshelf in Giger’s office.

QuantX was cleared for market in 2017, after a clinical reader study (a kind of study that compares radiologists’ performance on the same cases under two reading conditions, such as with and without a new technology) showed a 39 percent decrease in missed cancers and a 20 percent improvement in accuracy.

QuantX makes a determination by “extracting” features of tumors revealed by diagnostic MRIs. A radiologist first manually marks the center of a tumor, telling the system precisely where to look. QuantX then automatically generates a 3D outline of the lesion, providing volume, diameter, and surface area measurements. The machine learning component of QuantX was trained to recognize the relationship between certain features and whether the lesion is cancerous or noncancerous. It looks at lesion size, shape, and other morphology, like whether it has an irregular margin. It also looks at characteristics enhanced by contrast agents used during MRI exams, which reveal information about texture and changes over time. The higher the score, the higher the probability that the tumor is malignant.
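The contrast-enhancement characteristics mentioned above are often summarized from a lesion’s signal-intensity curve across the MRI time points. A simplified Python sketch of a few such kinetic descriptors follows; the definitions and names are assumptions for illustration and are not taken from QuantX.

```python
def kinetic_features(times, signal):
    """Simple descriptors of a contrast-enhancement curve.

    times:  acquisition times in seconds, times[0] = pre-contrast scan.
    signal: mean lesion intensity at each time (same length as times).
    Definitions are simplified illustrations, not QuantX's feature set.
    """
    s0 = signal[0]
    enhancement = [(s - s0) / s0 * 100.0 for s in signal]  # percent over baseline
    peak_idx = max(range(len(enhancement)), key=enhancement.__getitem__)
    peak = enhancement[peak_idx]
    time_to_peak = times[peak_idx] - times[0]
    uptake_rate = peak / time_to_peak if time_to_peak > 0 else 0.0
    if peak_idx < len(times) - 1:
        # Percent enhancement lost per second after the peak (positive = washout).
        washout_rate = (peak - enhancement[-1]) / (times[-1] - times[peak_idx])
    else:
        washout_rate = 0.0  # curve still rising at the last time point
    return {"max_enhancement": peak, "time_to_peak": time_to_peak,
            "uptake_rate": uptake_rate, "washout_rate": washout_rate}

# Hypothetical curve: fast rise to a peak at 120 s, then partial washout.
print(kinetic_features([0, 60, 120, 180, 240], [100, 220, 250, 230, 210]))
```

In breast MRI, a fast rise followed by washout is generally considered more suspicious than steady, persistent enhancement, which is why descriptors like these can feed into a malignancy score alongside size and shape.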

Back in her office, Giger starts up an earlier version of QuantX to show it in action. It takes fast computers to train the kind of algorithms within QuantX, but the system itself can run on an ordinary laptop. Magnetic resonance breast images occupy part of the screen; she demonstrates how a radiologist can use crosshairs to designate the center of the mass. The system then automatically outputs volume, diameter, and surface area. “See how fast?” Giger notes. “This was run in real time.”

To the right of the image section is a histogram showing the distribution of tumor types in cases similar to the one being investigated, like an online atlas. Each benign growth is in a green square and each cancer in a red square. She points to a pink arrow showing where the mass in question falls in comparison to the known database. “Computer’s suggesting: probably benign.”
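How the workstation chooses its similar cases isn’t described here. One common approach is nearest-neighbor retrieval in feature space, sketched below in Python as an assumption rather than a description of QuantX; the two-feature vectors and labels are invented.

```python
import math

def similar_cases(query, reference, k=5):
    """Return the k reference cases closest to `query` in feature space.

    query:     feature vector (list of floats) for the lesion under review.
    reference: list of (feature_vector, label) pairs for cases with known
               pathology, label "benign" or "malignant".
    Features are assumed to be pre-scaled to comparable ranges.
    """
    ranked = sorted(reference, key=lambda case: math.dist(query, case[0]))
    return ranked[:k]

def malignant_fraction(neighbors):
    """Share of retrieved cases that were confirmed malignant."""
    return sum(label == "malignant" for _, label in neighbors) / len(neighbors)

# Invented reference database of (features, pathology label) pairs.
reference = [([0.10, 0.20], "benign"), ([0.15, 0.25], "benign"),
             ([0.20, 0.10], "benign"), ([0.80, 0.90], "malignant"),
             ([0.85, 0.70], "malignant"), ([0.90, 0.95], "malignant")]

neighbors = similar_cases([0.12, 0.18], reference, k=3)
print(neighbors, malignant_fraction(neighbors))
```

Showing the retrieved cases, rather than only a single number, lets the radiologist see the evidence behind a suggestion like “probably benign.”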

About 10 percent of women who undergo screening mammography may go on to have diagnostic imaging, but only a few will actually have cancer. QuantX aims to help radiologists find cancerous lesions while identifying women whose tumors are most likely benign, saving some of those women from invasive biopsies.

In Giger’s UChicago lab, the prototype workstation that led to QuantX is being investigated to help patients who have been diagnosed with cancer. During treatment, they may receive periodic MRIs that are then run through the workstation as a “virtual biopsy,” to evaluate the treatment’s progress without needing repeated physical biopsies. When asked whether such a workstation will replace traditional biopsies, Giger says no. “We aim to use a virtual biopsy when an actual biopsy is not practical.”
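Tracking treatment progress across periodic scans comes down to comparing each follow-up measurement with the first. A minimal sketch, assuming tumor volumes have already been measured from each MRI (for example, by automatic segmentation); the function name and the volumes are hypothetical:

```python
def percent_change_from_baseline(volumes):
    """Percent change of each follow-up tumor volume relative to the first scan.

    volumes: measured volumes in scan order (any consistent unit, e.g. cm^3).
    Negative values mean the tumor has shrunk since the baseline scan.
    """
    baseline = volumes[0]
    return [(v - baseline) / baseline * 100.0 for v in volumes[1:]]

# Hypothetical baseline scan and two follow-up scans during treatment.
changes = percent_change_from_baseline([20.0, 15.0, 10.0])
print(changes)  # [-25.0, -50.0]
```

A steady decline like this is the kind of measurable decrease that may indicate a treatment is working.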

Currently UChicago Medicine and the University of Texas MD Anderson Cancer Center are evaluating QuantX for use in radiology and radiomics research. The automatic segmentation saves time, and the system’s ability to quantify so many tumor characteristics and output a likelihood score provides useful data for future projects.

QuantX took the first step in its journey from lab to market in the 2009–10 academic year in collaboration with the Polsky Center, UChicago’s innovation hub. One of Polsky’s accelerator programs is the Edward L. Kaplan, MBA’71, New Venture Challenge—a competition to help start-up businesses get off the ground.

After hearing Giger present her work at a Polsky Ideation Workshop in November 2009, Brian Luerssen, MBA’11, and James Krocak, MBA’11, teamed up with Giger and Gillian Newstead, former chief of breast imaging at UChicago. They formed a company named Quantitative Insights, which was a finalist in the 2010 New Venture Challenge. (Giger notes that she first entered the competition back in 2004, but during one presentation round everyone was so excited about the science that they ran out of time before discussing the business plan. She didn’t make that mistake again.)

After Quantitative Insights placed in the NVC, it joined the Polsky Incubator, which provides space, mentorship, and support for growing a sustainable business. E. J. Reedy, senior director of the Polsky Exchange, explains that while the New Venture Challenge is a sprint, the incubator is a marathon, with residency usually lasting a couple of years. Quantitative Insights had “a longer cycle than the typical company,” says Reedy. It “tends to take a little bit longer for some of the particularly science-based companies to find their footing in the market.”

While in the incubator, Quantitative Insights developed QuantX, took it through clinical reader studies, and gained clearance through the FDA’s de novo process for first-time technologies with “no legally marketed predicate device.” It “graduated” out of Polsky last year when Paragon Biosciences acquired it and created the company Qlarity (pronounced as “clarity”) Imaging to continue the commercialization of QuantX.

The FDA authorizes products for a narrow scope of uses. QuantX was cleared to evaluate MRIs for a tumor’s likelihood of being benign or malignant. Qlarity’s Meg Harrison, the chief operating officer and head of product, says the company wants to develop QuantX to support all types of breast imaging: MRI, ultrasound, mammography, and tomosynthesis. Each of those applications will need its own FDA clearance. The next step will be to make QuantX work with diagnostic ultrasounds.

While the companies built on Giger’s work continue product development, she pushes her research in more directions. QuantX is designed to generate a benign-malignant signature, but the “guts” of Giger’s technology can do more. For instance, if different tumor features were weighted differently, that signature might be used to predict risk of recurrence, says Giger. And the imaging big data that underpins CAD can also be applied to develop predictive models, for use in prognosis and therapeutic response.

Currently Giger is looking at several lung diseases using low-dose CT scans. (Breast cancer is the second-leading cause of cancer death in women; lung cancer is the first.) She also received a shared instrument grant at the end of 2018 for an extremely fast high-performance computer system, required to handle large datasets and train deep learning networks. “It can potentially go up to 1.9 petaflops,” she says. That’s 1.9 quadrillion floating-point operations per second.

In April her team was also awarded one of three inaugural seed grants from the C3.ai Digital Transformation Institute, a consortium “dedicated to accelerating the socioeconomic benefits of artificial intelligence.” The grants support the use of AI to mitigate COVID-19 and future pandemics; Giger’s team aims to develop machine intelligence methods to help interpret chest X-rays and CTs, which can aid in the triaging of COVID-19 patients.

An overarching goal for Giger, one she shares with the UChicago radiology department, is to use radiomics from digital imaging to advance precision medicine. In her vision, data from millions of anonymous patients will be used to customize health care to each individual person: detecting and diagnosing disease early and giving the right patient the right treatment at the right time.

* Gender-inclusive cancer reporting is complicated by explicit and implicit bias, a lack of culturally competent training in medicine, and limited patient data. For a broader discussion about the health care challenges LGBTQ+ patients face and how cancer care can become more inclusive, read this American Cancer Society fact sheet (PDF).