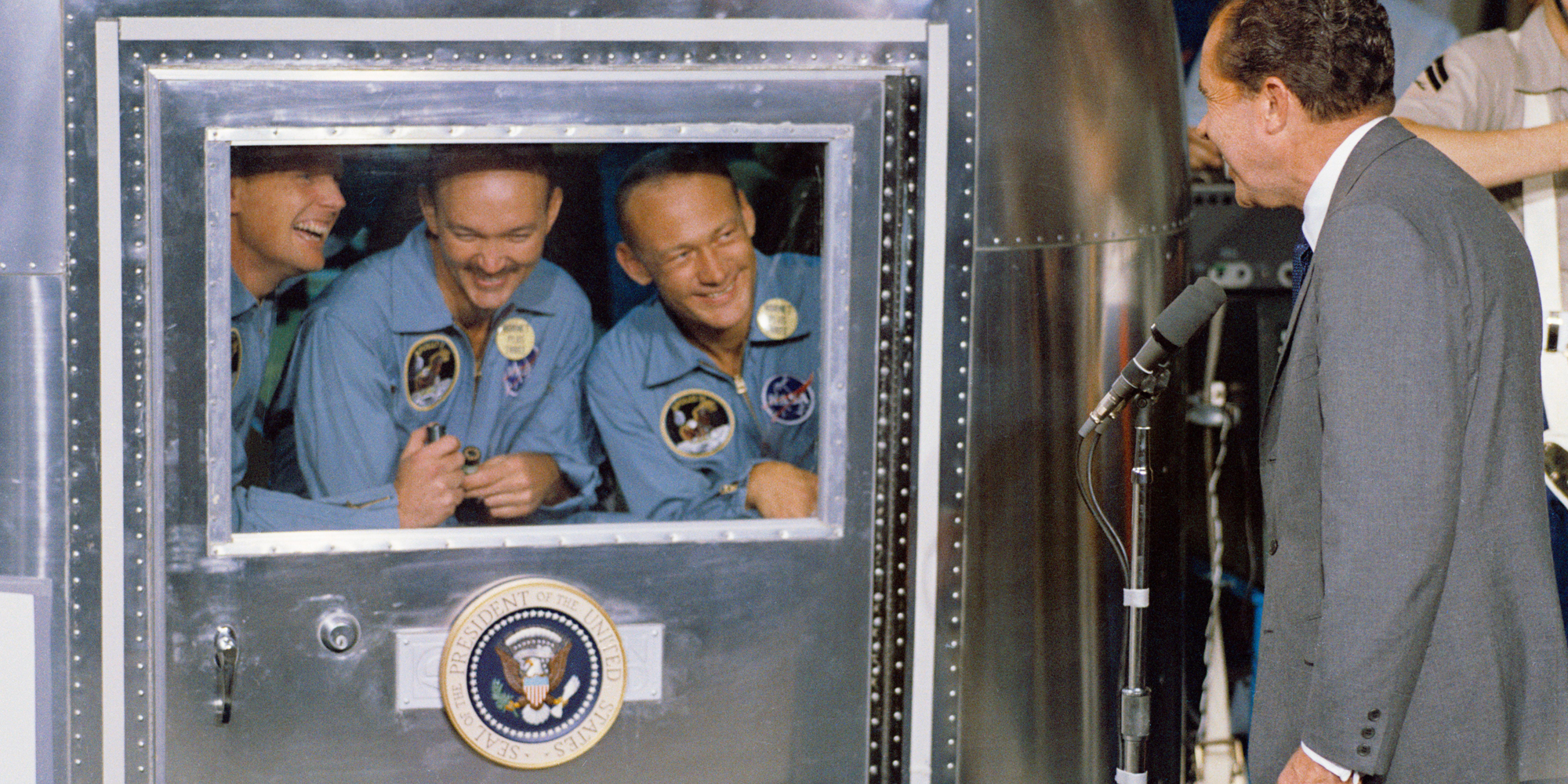

Hello from the other side: The Apollo 11 astronauts spent three weeks in quarantine to avoid contaminating Earth with extraterrestrial material. They greeted President Richard Nixon from inside the Mobile Quarantine Facility—a converted Airstream trailer. (Photo courtesy NASA)

The many lives of quarantine.

In October 2019 journalists Geoff Manaugh and Nicola Twilley observed a disease outbreak simulation cohosted by the Johns Hopkins Center for Health Security. The players included leaders in business, public health, and government. The fictional crisis was sparked when a coronavirus jumped from pigs to humans, quickly unfurling into a global pandemic. Inside the exercise, medical equipment was in short supply, financial markets crashed, and conspiracy theories flourished online.

Event 201, as it was termed, concluded with a cascade of recommendations. Organizers called for more government funding to support the development and manufacture of medications and vaccines, increased efforts to combat misinformation, larger stockpiles of personal protective equipment (PPE) and other essential medical equipment, and more.

Nowhere on the list was a discussion of quarantine—when to use it, how to enforce it, or what it would look and feel like for the people experiencing it. Yet just six months after Event 201, more than half the world was under some form of stay-at-home order.

It was a pattern Manaugh and Twilley, both AM’01, saw repeatedly while researching their forthcoming book, Until Proven Safe: The History and Future of Quarantine (MCD, 2021). In each of the four pandemic simulations they attended, “you get to the point where it’s like, ‘OK, now we just quarantine.’ But nobody thinks about what that’s going to mean,” Manaugh says. Quarantine was either glossed over or treated as a switch that could be easily flipped on, rather than an ordeal people would have to live through, full of fear, confusion, and boredom.

Martin Cetron, director of the division of global migration and quarantine at the Centers for Disease Control and Prevention (CDC), had noticed the same thing in the planning exercises he attended: a tendency to focus on the beginning and end of the crisis and overlook the lived experience in between. As he told Manaugh and Twilley, “Nobody appreciates what the middle game is going to look like, but that’s the hard game.”

At the heart of quarantine is the idea that a community can, with distance and time, protect itself from a threat—even if it doesn’t understand the nature of that threat. Though the terms are often used interchangeably, even by experts, quarantine is technically distinct from isolation, which refers to the separation of people known to be sick from healthy people. Those in quarantine, by contrast, are currently healthy, but “we simply have reason to believe they may yet become sick,” as Manaugh and Twilley put it.

Indeed, long before widespread understanding of the germ theory of disease, our forebears had sussed out, through a grisly process of trial and error, that separating certain people and goods from others seemed to prevent illness from spreading.

It is both a simple and an ingenious idea—the realization that, as Manaugh puts it, “if we cut these things off, we’re safe. We don’t know what it is we’re safe from, but we seem to be in a position of security.” Quarantine is also, as Manaugh and Twilley write in Until Proven Safe, “an unusually poetic metaphor for any number of moral, ethical, and religious ills: it is a period of waiting to see if something hidden within you will be revealed.”

That combination of simplicity, complexity, and metaphoric power has kept Manaugh and Twilley, who are married, thinking about quarantine for more than a decade. Their interest was first piqued while visiting Sydney in 2009, when they heard about a nearby quarantine station that had been converted to a luxury hotel. The couple spent their seventh wedding anniversary at the resort, where, for over 150 years, newly arrived immigrants to Australia waited to be deemed safe or to fall ill, their journey’s end just out of reach.

For two former art history students with ongoing interests in the built environment, the idea of a quarantine facility living out its second act as a resort was appealingly odd. As they wandered around Q Station, they were struck by the knotty design challenges that quarantine presented—a space that is not a prison, but holds people; not a hospital, but may house the sick; near a destination, but not in it.

Quarantine also struck them as a necessarily interdisciplinary topic, understood best when examined from a variety of angles: medical, historical, architectural. “Interdisciplinarity is definitely our preferred way of looking at things,” Twilley says, something they came to realize as UChicago graduate students. (They met on their first day of orientation.) Neither wanted to be confined to a single field, but both realized they enjoyed research and writing. Manaugh, the author of the New York Times best seller A Burglar’s Guide to the City (FSG Originals, 2016), founded the architecture blog BLDGBLOG and teaches; Twilley writes for the New Yorker and cohosts the podcast Gastropod, which looks at food through the lenses of science and history.

When they first started studying quarantine, it seemed more like a relic of a past era than a harbinger of our collective future. This was not a fringe view, they later learned. Some public health experts the couple talked to dismissed quarantine as a mostly obsolete measure with only narrow usefulness in today’s global, interconnected world. Its costs and dangers, one World Health Organization leader told them, were simply too large to justify.

As Manaugh and Twilley investigated quarantine more deeply, they began to form a different view. “We went from thinking of it as an outdated medieval tool to something that was actually central to the functioning of the modern world as we know it,” Manaugh says. Though we don’t see it and name it as such, quarantine is everywhere, omnipresent and invisible.

Then, of course, it became all too visible. (Manaugh and Twilley abandoned their book’s original title, “The Coming Quarantine,” for obvious reasons.) They found themselves writing about quarantine while in quarantine, a surreal experience that reinforced the lessons of their research—that quarantine was central to our lives, that we had not prepared adequately for it, that it could and must be reenvisioned.

The first formal quarantine order, Manaugh and Twilley write, was issued in 1377 by officials in the bustling port city of Dubrovnik, Croatia, who required people and goods from plague-ridden areas to spend a month on the nearby island of Mrkan or in the town of Cavtat.

Dubrovnik eventually built lazarettos, or quarantine hospitals, to accommodate these travelers, as did other cities throughout Europe. In normal times, the lazarettos served as a way station for merchants and their goods; in times of disease outbreak, they housed the local population, for the purposes of either quarantine or isolation.

Some, like Venice’s Lazzaretto Vecchio, established in 1423, and Lazzaretto Nuovo, established in 1468, were built on close-lying islands so “the sick were separated from but remained closely tied to the city,” Manaugh and Twilley write in Until Proven Safe.

Early quarantines were “a civic project,” Twilley says—an effort undertaken collectively to provide reassurance, structure, and continuity. (Such community mindedness had its limits: throughout Europe, Jews and other marginalized groups were blamed for the Black Death, at times leading to genocidal violence.) They were also, of necessity, less isolated than today. At times, entire neighborhoods might be quarantined together.

In keeping with this collectively oriented approach, the Venetian state covered the costs of accommodation, food, water, and medical care for those in quarantine. During an outbreak, historian Jane Stevens Crawshaw writes, the city’s monks and nuns would pray for the health of the city for eight days straight. The lazarettos themselves were seen as a public good and an essential part of the fabric of the city—Venetian notaries were required to ask clients if they would like to leave a bequest to these institutions in their wills. “That attitude is kind of incredible,” Twilley reflects, an acknowledgment that “we need this for all of our protection, and therefore, we are all going to be asked if we want to donate to it.”

Over time, however, the communal experience of quarantine gave way to something more atomized. Lazarettos were renovated to include more private rooms, partly because officials saw health benefits and partly because quarantine had begun to fracture along class lines. By the 19th century, when ships arrived at Malta’s busy lazaretto on Manoel Island, wealthy passengers disembarked to quarantine in the relative comfort of the lazaretto, while crew members, already worn down by weeks at sea, stayed on board, cramped and uncomfortable.

Only a handful of Europe’s lazarettos have been preserved in their original forms. (Some have been repurposed, but most were torn down.) Manaugh and Twilley, who have visited many of those that remain, see “a mournfulness to them,” Twilley says. She found herself wishing for a monument to everyone who had passed through, in honor of “the time, and in some cases, the lives that people had sacrificed to keep us all safe.”

The structures may have crumbled, but there are remnants of historic quarantine all over the modern world. The World Health Organization, for instance, has its origins in the 19th-century International Sanitary Conferences, meetings of European powers to develop standardized quarantine protocols across the continent. Modern passports have forerunners in fedi di sanità, Italian documents from the 1500s certifying that the holders had been declared infection-free, allowing them to move freely across national borders.

Borders themselves carry traces of infection control measures, Manaugh and Twilley write. Take the almost perfectly straight line separating Egypt from Sudan. A small, finger-shaped indentation in that border marks the location of a former British quarantine station designed to prevent the spread of the parasitic worm disease schistosomiasis. The cultural border between “East” and “West” was made literal in a network of quarantine stations along the edges of the Austro-Hungarian empire, with the aim of preventing Muslims returning from pilgrimages to Mecca from spreading diseases such as cholera.

Quarantine used to be a fact of life because disease was a fact of life. But for a brief period beginning in the 1950s, it receded in the public imagination, thanks to antibiotics and vaccines.

“We thought we’d won,” Twilley says. “We thought our science and technology and our advanced medical system had really put us beyond needing these types of countermeasures anymore.” In fact, when they started their research, “it was quite hard to find someone who’d been in quarantine” to interview.

Yet quarantine hadn’t disappeared. Instead, it took on new forms and flavors, as Manaugh and Twilley document in Until Proven Safe. The book includes chapters on efforts to isolate nuclear material and quarantine livestock and plants; one chapter narrates a visit to the International Cocoa Quarantine Centre near London, where different varieties of the pest-prone crop are cultivated and carefully monitored for infection before being released to growers around the world. The facility is designed to prevent a “chocpocalypse”: the near-complete collapse of the world’s chocolate supply due to disease.

These applications of quarantine to nonhuman contexts underscore “how ubiquitous and multiscalar it is to begin with,” Manaugh explains. Quarantine is a logic for interacting with all kinds of uncertainty: “We’re putting things in liminal states, we’re putting things in buffers, we’re delaying our actual interaction with them until we figure it out.”

And what could be more uncertain than alien life? Until Proven Safe charts quarantine across planetary borders—a challenge that took on new urgency in the Apollo era. Although NASA’s engineers were fairly sure there was no life on the moon that could hitch a ride back to Earth, “fairly sure” was not enough for the CDC, the Department of Agriculture, and the Fish and Wildlife Service, whose leaders sought to prevent the small matter of an accidental hitchhiker eradicating all plant and animal life on Earth.

NASA worked intently to create quarantine protocols for the returning astronauts, their spacecraft, and their cargo of lunar rocks, ultimately modifying an Airstream trailer to serve as the Mobile Quarantine Facility for the Apollo 11 mission. When Buzz Aldrin, Neil Armstrong, and Michael Collins splashed down in the Pacific Ocean, they were doused with a decontaminant and confined to the Airstream until they returned to Texas, where they spent another two weeks in the Lunar Receiving Lab at the Johnson Space Center. The command module and moon rocks also underwent a 21-day quarantine. (The grim worst-case-scenario plan in the event of alien infection was to bury the entire lab and everyone in it under dirt and concrete; ultimately, the worst fate anyone suffered was boredom.)

Today NASA and its counterparts around the globe are making plans for how to protect Earth against any threats posed by returning Martian material. The rover Perseverance is currently hard at work collecting soil and rock samples to be retrieved by a future lander, and, through an elaborate chain of spacecraft handoffs, returned to Earth in a sterile biocontainment unit sometime around 2030—at which time the samples can finally be opened in an ultrasecure lab.

The plan is not without controversy. An International Committee Against Mars Sample Return, whose advisers include a member of NASA’s original Planetary Quarantine Advisory Panel, has lodged its objections on the grounds that the material could contaminate our world. Meanwhile, some scientists feel the elaborate measures are unnecessary. After all, meteorites likely still carrying traces of extraterrestrial material regularly arrive on Earth without incident. From this point of view, Earth and Mars are part of the same planetary quarantine “pod.”

Even during quarantine’s comparatively quiet years, some public health experts were crying out for its reform. Precisely because quarantine has been used so rarely in the United States since the 1918 flu pandemic, the plans, facilities, and laws governing it sat mostly frozen in time.

The threat of bioterrorism following 9/11, as well as SARS (severe acute respiratory syndrome) and Ebola outbreaks in the 2000s, prompted the United States government to revisit the question of how, when, and why federal quarantine might be used. In 2017, after more than a decade of work and study, the CDC adopted new quarantine regulations. (States also have their own quarantine powers.)

The new rules allow the CDC to detain people anywhere in the country, not just when they first enter the United States or cross state lines; broaden the list of illnesses that can be used to invoke quarantine; and institute new data-gathering rules for airlines. The regulations also outline the rights of the quarantined to challenge their detention—although some argued those protections weren’t strong or clear enough.

Quarantine is one of the rare situations where a government can detain an individual who has committed no crime and may never pose a health risk to anyone. It’s easy to imagine quarantine being used to dystopian ends: a government arbitrarily imprisoning masses of people on the grounds that they might be sick.

Even the CDC’s Martin Cetron—“the closest quarantine has to a poster child,” Manaugh and Twilley write—acknowledges the serious danger of its misuse. “One of the problems is that quarantine is used as a political tool in an overreaction to fear,” Cetron told them. “And that has given it a really bad name.”

Take Kaci Hickox, a nurse who treated Ebola patients in West Africa with Doctors Without Borders. When she returned to the United States through the Newark, New Jersey, airport in 2014, then-governor Chris Christie insisted she be quarantined upon arrival—despite the fact that Ebola is only contagious when a patient is symptomatic, and Hickox showed no signs of illness and returned a negative blood test. (Elsewhere in the United States, health workers who had treated Ebola patients had been allowed to return home and self-monitor for symptoms, as the CDC’s guidelines recommended.)

Hickox’s lawyer ultimately persuaded Christie to allow her to return home to Maine, where she was promptly issued a formal quarantine order that she challenged in court. A judge ruled that the state did not have evidence that Hickox’s quarantine was medically necessary.

Many at the time—including Manaugh and Twilley—couldn’t understand Hickox’s resistance. But to Hickox, who was not only a nurse but also a former fellow at the CDC’s Epidemic Intelligence Service, her situation was a perfect example of how quarantine can be deployed in ways that don’t actually reduce risk. It was a reaction to fear, not a reasoned public health effort, and it had real consequences: the public controversy deterred other health workers from volunteering to help with the Ebola crisis.

Hickox’s principled stance did not make her many friends. She received hate mail and death threats for months after her return. But she continues to believe she did the right thing. “She acted with such intentionality,” Twilley reflects, “almost deliberately sacrificing herself to try to make sure that this kind of thing didn’t happen to others.”

And she succeeded. Hickox sued New Jersey and, as part of her settlement, got the state to adopt new guidelines for people under quarantine, including the right to challenge the order.

“I’m a public health nurse, so I know that sometimes quarantine will be needed,” Hickox told Manaugh and Twilley. “But when we do it, we need to do it well and we need to think about that person as a human with a family and a livelihood and everything else.”

Her point, echoed by other public health leaders, including Cetron, was clear, Twilley says: “If you ask someone to make these sacrifices, you have a duty of care to them.”

Somber though it may be to contemplate, COVID-19 will not be the last pandemic. (In fact, many scientists believe we’ll see more zoonoses—diseases that jump from animals to humans—as the human population grows and spreads.) For some of us, it may not even be the last pandemic of our lifetimes.

With the wounds of this crisis still fresh (and indeed, still being inflicted), there comes a rare opportunity to take what we’ve learned and prepare for the future. “Before COVID-19, pandemic preparedness people felt like these lone Cassandras, bringing the tidings of bad news that seemed like the stuff of movies, not real life,” Twilley says. “I’m hoping that now that quarantine has become universal again, we could have those conversations more productively.”

At the end of Until Proven Safe, Manaugh and Twilley argue for a wholesale reimagining, including changes to the built environment that would make quarantine less disruptive to implement and less burdensome for the quarantined. “Thinking about the city as this flexible instrument for dealing with disaster would be really useful in a medical context,” Manaugh says. Cities could develop “pandemic modes”—closing down streets to allow pedestrian distancing, making crosswalk buttons touchless, and so on—that they toggle on and off as needed.

Just as we have tornado shelters in airports, we could build pandemic preparedness into publicly funded facilities such as schools, stadiums, and convention centers. Simple measures, such as making sure there are enough electrical outlets and bathrooms, could allow these spaces to be quickly converted into modern lazarettos.

We would benefit, too, from a return to the civic-minded, community-spirited ethic of quarantine in the past—a point emphasized by Patrick LaRochelle, an American doctor who treated Ebola patients in the Democratic Republic of the Congo. LaRochelle came to understand the potential trauma of forced separation firsthand when he had to quarantine in a biocontainment unit following an Ebola exposure. While he wasn’t bothered by the isolation himself, the experience helped him understand why his patients feared being kept from family and community as much as they feared Ebola itself.

“His insight, which was really interesting, is that the quarantine is never going to work if it doesn’t feel like a community experience,” Twilley says.

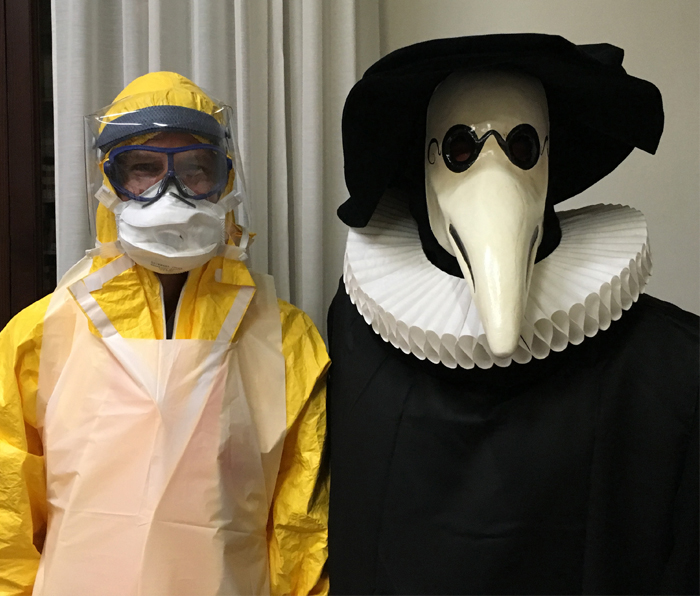

But it’s possible: in the United Kingdom, for example, Ebola-exposed patients quarantine in Trexler units—essentially, negative-pressure tents contained within hospitals. Rather than wearing full PPE, medical staff interact with the patient through suits built into the unit’s walls. Outsiders can learn to use these features with relatively little training, meaning that family members can visit a loved one in quarantine. During COVID-19, doctors whose faces were covered by masks and goggles began wearing smiling photos of themselves around their necks to help foster a sense of connection with patients (and look a little less ominous).

Simple acts like these matter, Manaugh and Twilley argue, and can make the difference between compliance with or avoidance of quarantine. “One of the most interesting design challenges is how to make quarantine, this thing where you are separating yourself, feel like an act of connection,” Twilley says.

Community mindedness also means guaranteeing “a basic social safety net,” Manaugh says, without which quarantine will invariably fail. Of course, US business owners protested COVID-related restrictions; no one was offering a viable alternative for them and their employees to survive for a year without work. Just as the Venetians (and some countries today) shouldered the burden of financially supporting the quarantined, so must we.

Changing quarantine means changing ourselves. Individualistic Americans have long been resistant to seeing themselves as responsible for the care of others, yet in a pandemic there is no other option, Manaugh and Twilley argue: we can’t protect ourselves if we don’t care for those around us. As they write in Until Proven Safe, “we will never have public health if we do not think of ourselves as a public.”