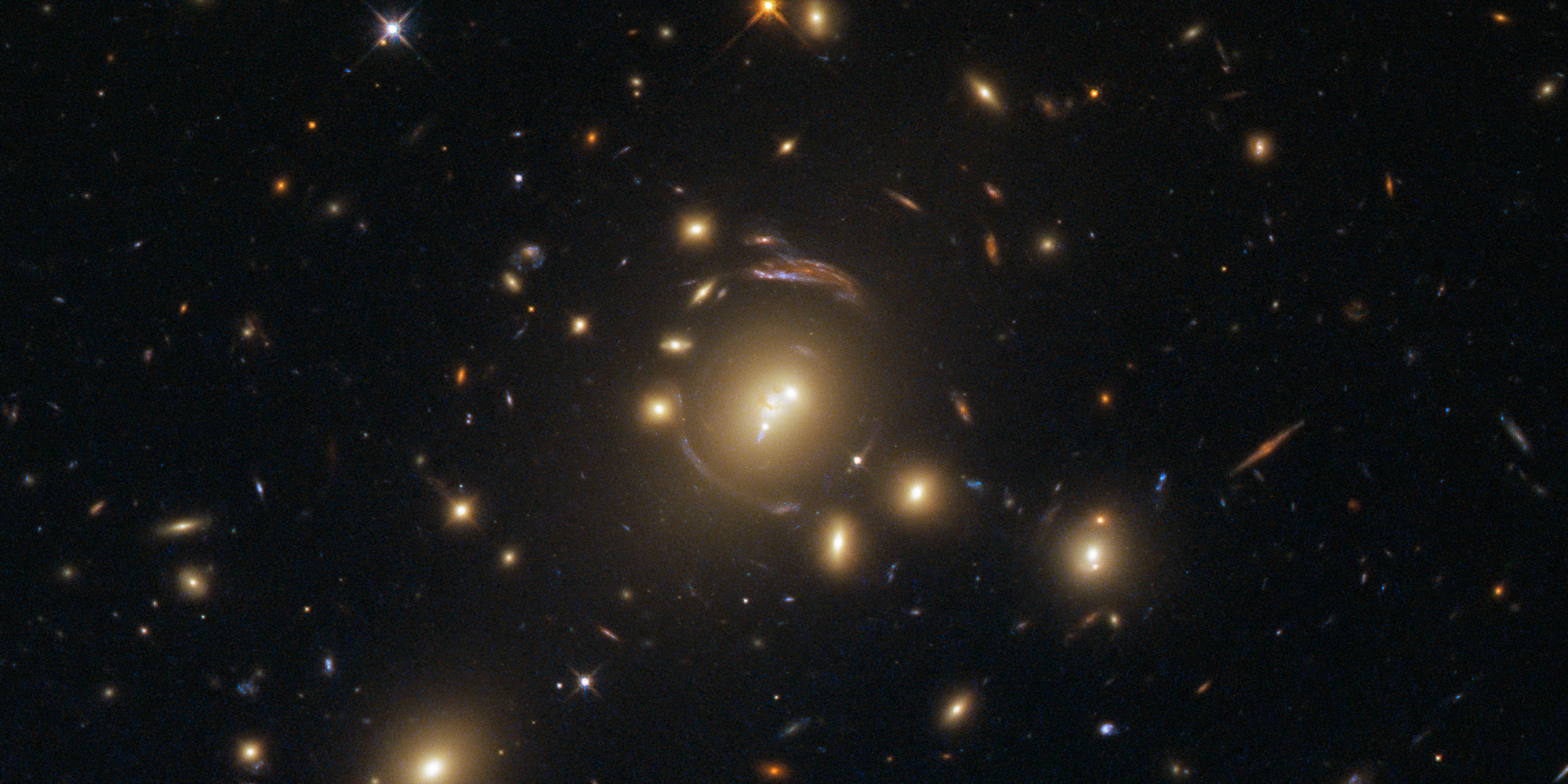

This image, captured by Hubble’s Wide Field Camera 3, shows light from distant galaxies bent into arcs, a distortion caused by a cosmic phenomenon called strong gravitational lensing. (Photo courtesy ESA/Hubble and NASA)

Astrophysicist Brian Nord looks for lenses through AI eyes.

Brian Nord is a visiting research assistant professor in the UChicago Department of Astronomy and Astrophysics, an associate scientist in the Machine Intelligence Group at Fermilab, and a senior member of the Kavli Institute for Cosmological Physics. He is a leader in the institute’s Space Explorers educational program for high school students and a cofounder of Deep Skies, a collaborative research group that applies artificial intelligence to astrophysics.

There have been two artificial intelligence winters: seasons of disappointment following a spell of hype, when AI was touted as the solution to any number of problems but failed to deliver. We think we’re approaching another winter, but we hope it’ll be mild. The problem is that AI algorithms can do amazing things, but how they do them is not well understood. So I try to find simple questions within a complex problem that might help us better understand what AI can do.

One of the grandest mysteries of the universe is the dark sector. What’s the nature of dark energy (the hypothetical energy that pushes the universe apart)? What’s the nature of dark matter (the invisible matter, inferred from its gravitational effects, that holds the universe together)? Then there are the related questions of how galaxies form and evolve. Solving any of those questions would lead to a better understanding of the others. One of my smaller, more focused efforts that helps investigate both the dark sector and the black box of artificial intelligence is improving the search for gravitational lenses.

There are regions of space that act like optical lenses. General relativity states that anything with mass, whether visible or dark matter, warps space-time. The curvature of space-time around an object explains gravity; this curvature also deflects light. So if one of these massive objects (anything from a planet to a galaxy cluster) is between an observer and a light source, the light will look distorted: maybe appearing multiple times (strong lensing), maybe looking slightly stretched and magnified (weak lensing), maybe appearing just a little bit brighter (microlensing).
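The geometry behind that distortion can be written compactly. As a textbook illustration rather than anything from this piece, assume the simplest case: a single point mass $M$, small angles, and a thin lens, with $D_L$, $D_S$, and $D_{LS}$ the distances to the lens, to the source, and from lens to source. A ray passing the mass at impact parameter $b = D_L\,\theta$ is bent by $\hat{\alpha}$, and the source’s true angular position $\beta$ is related to its observed position $\theta$ by the lens equation:

$$
\hat{\alpha} = \frac{4GM}{c^{2} b}, \qquad
\beta = \theta - \frac{D_{LS}}{D_S}\,\hat{\alpha}(\theta) = \theta - \frac{\theta_E^{2}}{\theta}, \qquad
\theta_E = \sqrt{\frac{4GM}{c^{2}}\,\frac{D_{LS}}{D_L D_S}}
$$

Rearranging gives the quadratic $\theta^{2} - \beta\theta - \theta_E^{2} = 0$, whose two solutions $\theta_\pm = \tfrac{1}{2}\left(\beta \pm \sqrt{\beta^{2} + 4\theta_E^{2}}\right)$ are the “appearing multiple times” of strong lensing. When source, lens, and observer line up exactly ($\beta = 0$), the image becomes a ring of angular radius $\theta_E$, the Einstein radius.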

Historically, searching for strong gravitational lenses was done by manually looking at pictures. It worked because images weren’t that big. But as technology evolved, we got enormous images with hundreds of galaxies, and you’d scan and zoom, scan and zoom. It felt like our eyes were being misused. There’s got to be a better way. Starting in the 1990s, scientists began looking at the way digital pixels were connected, building image-based algorithms to help.

Then in 2016 scientists began using artificial neural networks to scan images automatically for lenses. Training the networks is still a challenge, which means it’s sometimes still faster for humans to do the work. And even with the networks doing the heavy lifting, we still use our eyes to double-check the results.

Another challenge is that we need far more lens images than are currently available to train the neural networks, so we have to create convincing simulations. This is one reason physics is a great place to experiment with AI. We have already modeled much of the universe: You can write down the equations of motion of a photon getting lensed. You can model what the lens and source look like and then add telescope noise. A simulation needs only a small number of distinct components, and we can fabricate images that can fool astronomers who have been looking at strong lens images for decades.
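The recipe in that paragraph (trace photons through a lens, model the lens and source, add noise) can be sketched in a few dozen lines. This is a toy under loudly stated assumptions, not Nord’s simulation pipeline: the lens is a singular isothermal sphere (a common idealized galaxy model) centered in the frame, the source is a circular Gaussian blob, angles are in arcseconds, and “telescope noise” is just independent Gaussian noise per pixel. The function names and all parameter values are hypothetical. Each pixel is traced back to the source plane with the lens equation β = θ − α(θ), and because lensing conserves surface brightness, the pixel simply takes the source brightness at β.

```python
import math
import random


def sis_deflection(x, y, theta_e):
    """Deflection of a singular isothermal sphere (SIS) lens at the origin.

    For an SIS the deflection has constant magnitude theta_e and points
    radially away from the lens center.
    """
    r = math.hypot(x, y)
    if r == 0.0:
        return 0.0, 0.0  # deflection is undefined exactly at the center
    return theta_e * x / r, theta_e * y / r


def gaussian_source(bx, by, x0, y0, sigma, peak=1.0):
    """Surface brightness of a circular Gaussian source in the source plane."""
    d2 = (bx - x0) ** 2 + (by - y0) ** 2
    return peak * math.exp(-d2 / (2.0 * sigma ** 2))


def simulate_lens_image(n_pix=64, pixel_scale=0.05, theta_e=1.0,
                        source_pos=(0.1, 0.0), source_sigma=0.1,
                        noise_sigma=0.01, seed=0):
    """Return an n_pix x n_pix image (list of rows) of a lensed Gaussian source.

    All angles are in arcseconds (an assumption); the lens sits at the
    image center. Hypothetical toy model, not a production simulator.
    """
    rng = random.Random(seed)
    x0, y0 = source_pos
    half = (n_pix - 1) / 2.0
    image = []
    for j in range(n_pix):
        row = []
        for i in range(n_pix):
            # Observed (image-plane) position of this pixel's center.
            x = (i - half) * pixel_scale
            y = (j - half) * pixel_scale
            # Lens equation beta = theta - alpha(theta): trace the ray
            # back to where it started in the source plane.
            ax, ay = sis_deflection(x, y, theta_e)
            bx, by = x - ax, y - ay
            # Lensing conserves surface brightness, so the pixel shows
            # the source brightness at the traced-back position.
            flux = gaussian_source(bx, by, x0, y0, source_sigma)
            # Stand-in for telescope noise: independent Gaussian per pixel.
            row.append(flux + rng.gauss(0.0, noise_sigma))
        image.append(row)
    return image


if __name__ == "__main__":
    img = simulate_lens_image()
    # Crude text rendering: '#' for bright pixels, '.' otherwise.
    for row in img[::4]:
        print("".join("#" if v > 0.3 else "." for v in row[::2]))
```

With the defaults, the source sits almost directly behind the lens, so the noise-free image should be a nearly complete Einstein ring about 1 arcsec in radius, brightest near x = +1.1 and x = −0.9 arcsec; moving `source_pos` to something like `(0.5, 0.0)` should break it into two separate images. A realistic simulator would also blur the image with the telescope’s point-spread function and use richer lens and source profiles, which this sketch skips.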

There’s a gotcha: Why not use AI to also make the simulations? I hope that my research will close that loop, creating advanced algorithms that can generate these images more quickly and intelligently.

Why do we want to identify so many lenses anyway? Each lens offers a little bit of information. You want to model as much as you can: how intrinsically bright the object is, how much mass is in the dark halo (the inferred halo of dark matter that surrounds galaxies and galaxy clusters), and the distance between you and the object. These answers help map the mass distribution in the lens and, when combined with other lens models, how that mass distribution has changed over time, which helps determine how much dark matter and dark energy there is. With hundreds of lenses to model, you have the statistical power to help cosmologists understand how fast the universe is expanding.

The neural networks that seek out gravitational lenses (or create fake Yelp reviews, or drive cars) are considered artificial narrow intelligence, or ANI, which is good at doing a single task. When people feel anxiety over AI, that fear of robot overlords or the rise of the machines, it’s at least partially in reference to artificial general intelligence, or AGI: a machine that can understand its environment and reason the way humans do. Think of HAL 9000 in 2001: A Space Odyssey or the android hosts of Westworld. Whether consciousness is required for true AGI is up for debate.

I don’t think immediate alarm is warranted that AGI will enslave us in a Matrix-type scenario. But we should be paying attention because AGI could happen quickly or in a way we weren’t expecting, and there could be real repercussions for society. It’s not a stretch to compare the AI era we’re in now to the first half of the 20th century, when we were developing nuclear weapons. These are two highly disruptive technologies that, when wielded with sufficient power, will change large swaths of lives.

The fact that I’m trying to make these algorithms better means that I’m contributing in some way. I take that very seriously, so I hope to learn more about the ethics of AI and be a conduit for voices that prioritize those considerations.

There’s a confluence of science, ethics, and humanity: biases that seep into how science is designed and conducted, the perpetuation of those biases through purportedly objective research, the effects on society that arise from technological advancement. They’re all connected.

We’re creating these things, these ever-evolving human facsimiles, in our own image, but what do we still not know about our own humanity? Suppose we do achieve AGI, and in truly human form. How will we treat them? Will we enslave them? James Baldwin talks about what oppression does to the oppressed, the oppressors, and the witnesses—the terror it unleashes on your soul.

We will have a better handle on the ethics of AI if we are better to each other as humans and pay better attention to how we view and treat one another. If we’re not paying attention to inclusion and to how equitable our research spaces are, are we introducing folly into the technical aspects of our research?

Diversity and inclusion were important considerations during the founding of Deep Skies, a collaborative community that brings together astronomy and AI experts to explore mutual benefits of large-scale data analysis. I cofounded it about a year ago with UChicago postdoc Camille Avestruz and Space Telescope Science Institute astrophysicist Joshua Peek.

Deep Skies started as a journal club, but we realized that it could be an environment for real work to be done, for research to happen in a new way. We develop partnerships with institutions across the world, learning from each other without having to reinvent the wheel. And we encourage open-source methodologies and accurate attribution for ideas and contributions while engaging in best practices to improve diversity in STEM (science, technology, engineering, and math) fields. We want to make sure that different voices are in the room.

We’re scientists. We’re physicists. We want to break things down to their fundamental causes and constituents, but fundamentally, science is about humans. It’s about the people who do science and the people whom it serves. Emotional intelligence makes for better artificial intelligence. And being more human makes for better science.

Education, engagement, and empowerment

I don’t know what it means to do science without communicating it back to people. It feels inauthentic or insufficiently expressive for me to do one without the other. But my mission isn’t to make scientists. What I want to do is help people recognize their own power and agency to see that science is a tool for them, not just the purview of some special group.

When I was in high school planning to pursue physics, people would say, “You’ll be able to do anything!” Teachers and counselors make STEM out to be this magical Swiss Army knife that will set you up for the future, and I think that’s a bait and switch. It marginalizes the importance of art and language and literature, all those things that are also central to our humanity.

Space Explorers, a high school science enrichment program through the Kavli Institute for Cosmological Physics, could exist in any field, as long as it involves self-discovery, learning about the world and the student’s place in it.

But as it is a science program, we focus on projects that have practical and gaming elements. We’ve done mystery games to help students make hypotheses and test them out. We also do engineering projects, asking students to improve instrument or architectural concepts, learning about accuracy and precision and iterative design.

Public outreach is an important part of my career. I’ve given Ask-a-Scientist lectures, spoken at the Fermilab Family Open House, performed in the Fermilab Physics Slam, and given tours for Saturday Morning Physics.

But I’d like to find a better word than outreach—it creates a divide between those who do (science in this case) and those who don’t. This thing that we love, that we do to make the world a better place, gets distorted by putting ourselves in the center of it.